Innovative artefact elimination and source localization-based feature extraction for EEG BCI pipelines

In the thriving realm of neuroscience, Brain-Computer Interfaces (BCI) have emerged as a groundbreaking technology that facilitates direct communication between the human brain and external devices or applications. By harnessing the power of neural signals, BCI have the potential to revolutionize various domains, including healthcare, assistive technology, and human-computer interaction. Multiple recording modalities are available for capturing brain activity. Among them, electroencephalography (EEG) devices stand out as a wearable, comfortable, and cost-effective solution that provides high temporal resolution to non-invasively monitor basic user intentions.

This research is dedicated to the rigorous investigation of motor imagery, with a specific focus on discerning patterns associated with grasping movements of the right and left hands. The ultimate objective is to achieve the highest possible classification accuracy of user intent without the need for neurofeedback or additional indirect information. The limitations of EEG in terms of signal-to-noise ratio and spatial resolution necessitate the use of source localization techniques, which can unveil the intricate dynamic interactions and connections within the brain. To easily distinguish between existing algorithms and the new ones introduced in this research, the original acronyms and appellations will be noted in bold.

Ensuring the accuracy and reliability of EEG data analysis requires the homogeneity of electrode functioning and the cleanliness of signals in the vast majority of cases. This entails addressing two primary objectives: eliminating statistically significant (and consequential) artifacts and rectifying any malfunctioning electrodes. The spontaneous blink represents a major physiological disturbance, occurring simultaneously with the neural signal of interest with an average probability of 10%.

These considerations led to the design of a multi-modal dataset targeted at comprehensively recording every aspect of the blinks using EEG, electrooculography, electromyography, eye tracker, and high-speed camera. Additional paradigms, including motor execution, P300, and steady-state visual evoked potentials have also been investigated due to their relevance in inferring a comprehensive understanding of the underlying neural mechanisms during MI.

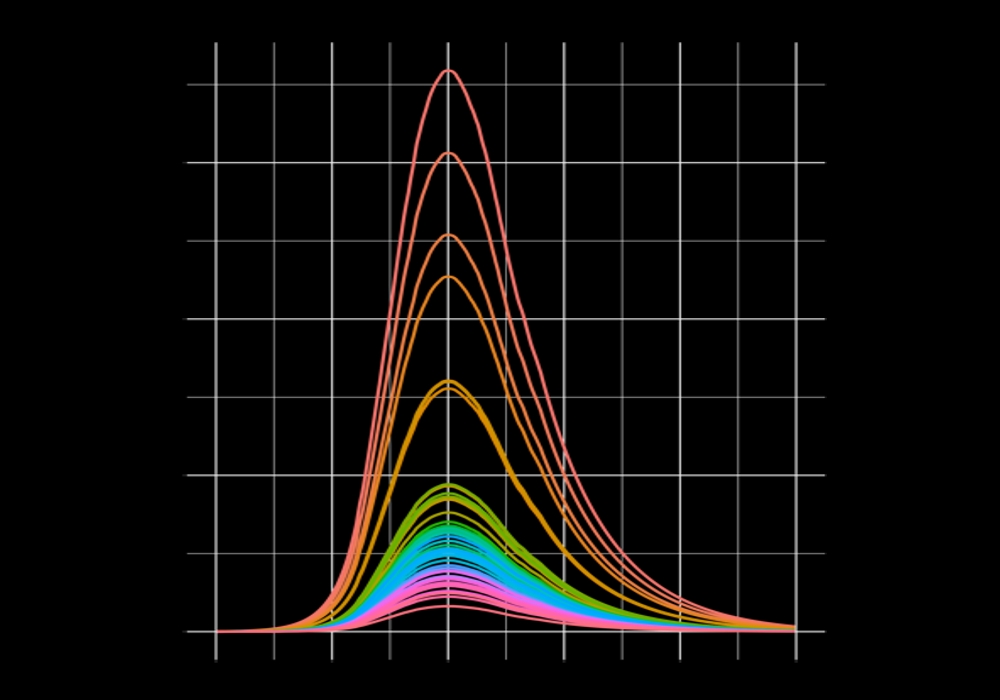

The Fitted Distribution Monte Carlo (FDMC) simulation is first conducted for a priori sample size estimation during a prospective power analysis. The goal is to ensure that sufficient data will be collected to truly comprehend the intricacies of the cortical phenomenon. FDMC demonstrates its superiority by lowering the sample size requirement by 35% compared to the Normal distribution (and by seven times compared to traditional power tables). FDMC is of particular interest when only limited resources or time are available.

The resulting multi-modal dataset facilitated the development of a robust model for accurately classifying and correcting blink artifacts as well as removing bad channels from the EEG data. The Adaptive Blink Correction and De-drifting (ABCD) algorithm has proved to improve the overall data quality and displays significantly better results than the state-of-the-art, i.e., Independent Component Analysis (ICA), or Artifact Subspace Reconstruction (ASR). The classification accuracy, along with its confidence interval at 95% confidence level, reveals a mean classification accuracy of 93.81% [74.81%; 98.76%] for ABCD against 79.29% [57.41%; 92.89%] for ICA or 84.05% [62.88%; 95.31%] for ASR.

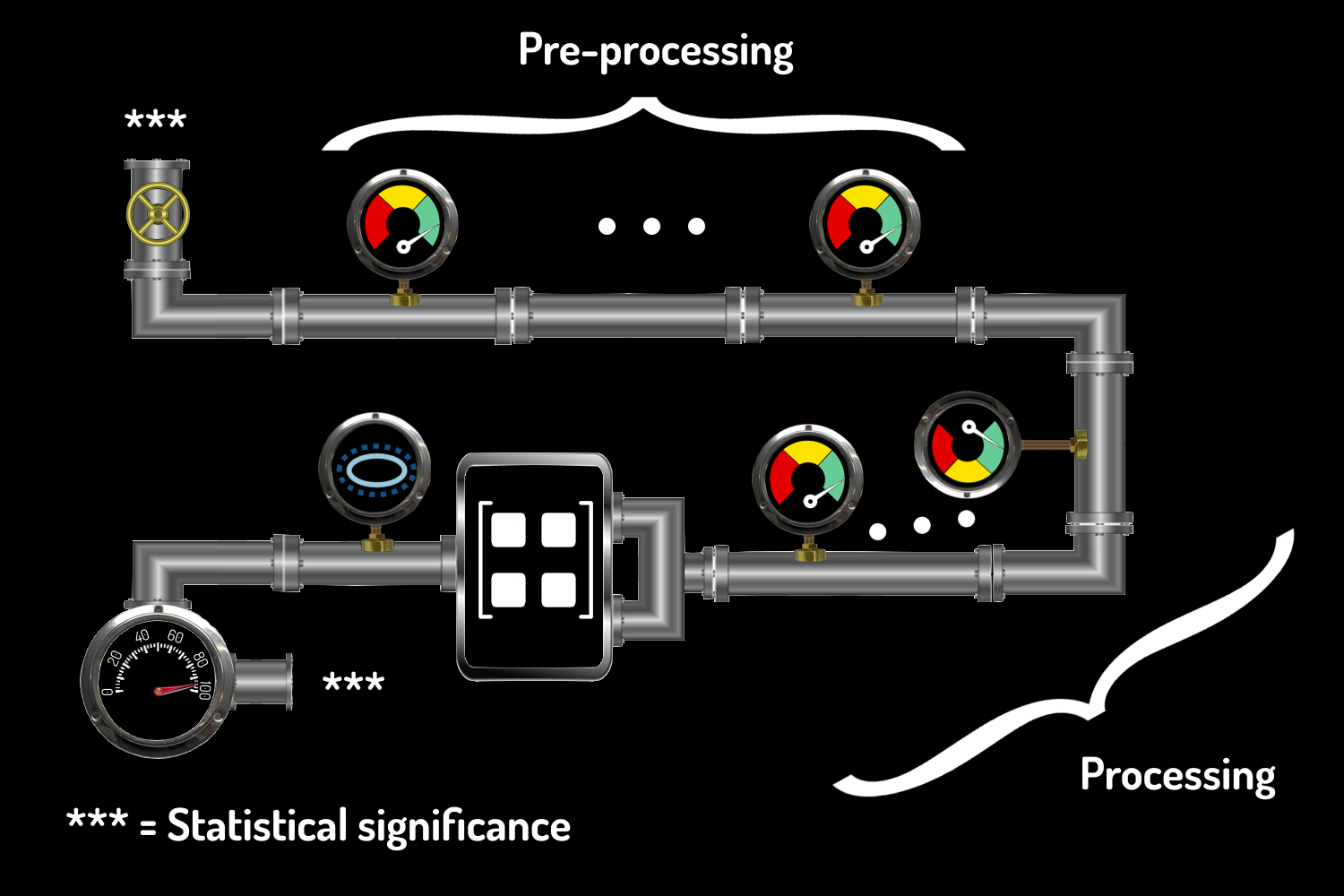

A combination of various innovative algorithms is implemented to extract the most prominent signal of interest (SOI) across the temporal, spatial, and frequential domains. Source localization is first computed on the ABCDcleaned EEG data. The Source Localized Spatio-Temporal (SLST) features approach is applied to capture the spatio-temporal characteristics of the SOI by analyzing the similarities across multiple trials. To further enhance the classification process, a Dual Classifier (DC) is implemented, utilizing both the spatial locations and time-derived covariance matrices from the SLST feature extraction algorithm. The class-specific Fréchet means, along with all other covariance matrices, are computed at the Core Channel Selection, which is determined based on a comprehensive meta-analysis.

Comparisons across different frequency bands (delta, theta, alpha, and beta), filter types (Butterworth, Chebyshev, and Elliptic), and re-referencing, e.g., Common Average Reference, Large and Small Laplacian spatial filters, are also carried out to reveal the optimized combination of all these processing steps. To ensure a fair comparison, all data are first pre-processed with ABCD, re-referenced with the Large Laplacian filter and filtered with a beta pass-band Butterworth filter, as this combination of various filters proved to yield the best accuracy.

A new introduced Consistency computation serves to validate classifiers’ stability and can thus be used to confirm the hierarchy between them, revealing that our SLST+DC method yields a mean classification accuracy of 95.03% (SD = 3.41%). The commonly employed Common Spatial Pattern (CSP) coupled with Linear Discriminant Analysis (LDA) yields 89.16% (SD = 1.76%) or 88.99% (SD = 1.60%) when coupled with Support Vector Machine (SVM). Comparisons were also made with newer methods such as the Minimum Distance to Riemannian Mean (MDRM) that achieved 81.13% (SD = 4.64%) and Tangent Space (TS) 86.09% (SD = 4.48%). All the results issued from the various pipelines are then compared using their confusion matrices.

More generally, confusion matrices (CM) consist of two independent samples (positive and negative), each following a Binomial distribution. To extend their utility, the confidence intervals (CI) are calculated for the probabilities associated with each sample. A complementary estimation of the minimum sample size is presented based on the Distance Separation (DS) method relying on the chosen CI approximation. DS can serve as a viable alternative to FDMC for experimental design, specifically aimed at evaluating the statistical distinction between two classifiers possessing known accuracies. Finally, a method to estimate the accuracy though standard deviation of CI (SDCI) is presented and applied to visual representations of CM, called Confusion Ellipses (CE).

Comparisons with PyCM, a Python library dedicated to CM evaluation and comparison, shows that their computations seem to underestimate the CI mean widths around zero compared to SDCI. Various CI approximations are also tested to assess the influence of the CI approximation choice and can serve as reference when designing a new experiment with the goal of comparing two pipelines with known global classification accuracies. The applicability of these novel methodologies (i.e., SDCI, DS, CE) extends far beyond the confines of the BCI field, encompassing a wide range of domains and disciplines.